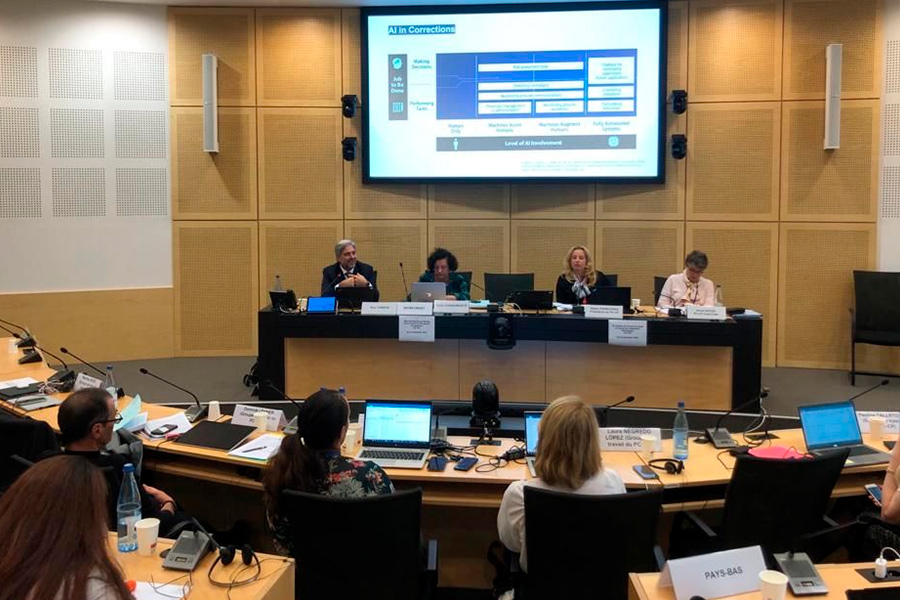

Joint Interview

Council for Penological Co-operation of the Council of Europe

Ilina Taneva (Secretary), Håkan Klarin, Pia Puolakka & Fernando Miró Llinares (Scientific Experts)

The Council for Penological Co-operation (PC-CP) is the Council of Europe’s expert committee dedicated to shaping standards and principles in the field of the execution of custodial and non-custodial penal sanctions and measures. The PC-CP is currently preparing a draft Recommendation on the Ethical and Organisational Aspects of the Use of Artificial Intelligence and related Digital Technologies by Prison and Probation Services.

The document is to be endorsed by the European Committee on Crime Problems (CDPC) and eventually formally adopted by the Committee of Ministers of the Council of Europe. This Recommendation is to provide guidance to administrations considering the use of these technologies and to the private companies developing them.

What prompted the need to develop specific recommendations on the use of artificial intelligence (AI) in prisons and probation?

IT: The European Committee on Crime Problems (CDPC), which is our steering committee, has decided that artificial intelligence should be on our agenda.

Several Council of Europe bodies are now dealing with artificial intelligence as part of their mandate. Guidelines for courts and prosecutors have already been adopted, and a framework convention is currently being developed. The convention is expected to be finalised by the end of the year and will provide a general framework for the use of artificial intelligence.

In addition, the CDPC is working on a legal instrument for automated driving vehicles and criminal liability, which is also related to AI.

The Council of Europe is actively working on various aspects of artificial intelligence. Depending on the area, more detailed standards may be developed in addition to the framework convention.

PP: Prison and probation services work with a very vulnerable and marginalised population, so it’s particularly important to have ethical guidelines for using AI. The regulation of AI is a much-debated topic in all fields, but it should be especially important in corrections.

FM: When talking about artificial intelligence, you will find some radical views, either very dystopian or very utopian, about how it is going to solve everything or how it is going to be the worst thing that has ever happened to prison and probation services. We have to be very realistic about what can be offered, but also about the challenges that come with it.

The Council of Europe is realising that artificial intelligence and related technologies are already being used in some prisons and in criminal justice systems as a whole. This kind of ground-breaking technology is developing so fast that if you do not regulate it or establish some ethical recommendations, it will be created and used without them. That’s why we need to regulate it now.

HK: It’s a good thing that we are writing recommendations this early in the technology development cycle. We are standing on the threshold of a new movement to introduce technology and digitisation from the inmates’ perspective. Digital services in the prison context are currently being used on a global scale, and you can already see good examples of their use. But they’re only being used with limited application.

As we move forward, we are going to see a lot of new technologies and smarter ways of using them. This will also lead to the introduction of AI, whether we like it or not, because many of the underlying technologies use machine learning and related technologies.

It’s vital for us to have regulations and recommendations that outline what should and should not be done. They also serve as a guide for practitioners and vendors to learn how digital technologies and AI could be used in the justice sector. By introducing recommendations at this stage, we have the chance to roll out these technologies in the right way and maximise their positive effects.

What has been the approach to developing recommendations for an ever-evolving and multi-faceted application of technology?

IT: The recommendations focus on addressing the challenges and advantages of utilising AI in prison and probation. They are designed to be broad enough to be helpful to all prison and probation services, regardless of their current level of AI adoption.

The main objective is to provide guidance on how to use AI in a responsible and safe manner, while also keeping in mind that the AI field is rapidly evolving.

What are the main concerns that the recommendations address?

IT: We are mostly concerned with how this technology can affect human rights and freedoms. One of the major issues is data protection. Artificial intelligence can be very intrusive. We do not yet know how far this intrusiveness can go and how it can negatively impact the protection of human rights.

Another important concern is that we don’t want to replace human contact with artificial intelligence because, as evidence has shown, human relations can positively influence offenders.

As such, we recommend that technology should replace repetitive, everyday tasks that are not crucial for rehabilitation purposes, such as opening and closing doors or distributing food. It should be designed to support staff and not replace them or undermine their role. In addition, we recommend that private companies adapt their artificial intelligence tools to the needs of staff and ensure that professionals remain in control as much as possible.

FM: The main goal is to not lose sight of the prison and probation goals in the process of digitalisation. It’s very important that we maintain the philosophy of the prison system at the centre of the design and development of AI.

Therefore, the focus on rehabilitation and the guiding principles of prison and probation should remain a priority. In that sense, AI should only be used when necessary and when it actually improves something, rather than being implemented just because it’s available.

PP: It’s important to remember that AI is not meant to replace human decision-making but rather to support it. For instance, in the corrections field, AI can be used to a certain extent for security purposes and to assess clients and processes.

But ultimately, humans should be making the decisions. This is particularly crucial when working with vulnerable populations, as it is important to maintain a robust human component in these interactions.

HK: One of the key recommendations I would highlight is the creation of a transparent development process that can guide the agency throughout the project. This includes good governance and best practices to identify and address any hurdles or problems that may arise. This is particularly critical when working with new technologies, to ensure a smooth and successful outcome.

Transparency is central when addressing the use of AI in the prison and probation context. The process of designing, developing, and using AI should also be open to public scrutiny and be regularly monitored.

This principle should be considered early in the development process, as open design promotes trust and accountability.

What is the importance of bridging the current gap between digital and AI literacy in the industry and the increasing pace of development of these technologies?

PP: Staff using AI need to understand the fundamentals and logic of this technology. They must be aware of why they are doing what they are doing and what is actually happening inside the so-called black box of AI.

These projects are usually led by ICT experts and most staff don’t have enough technical knowledge. However, prison and probation officers are the ones most in need of training on how to use AI ethically.

Unless staff are aware of how these systems work and how they should be used, there is a risk that AI will start to influence their thinking and other processes.

It is also important that offenders have a basic understanding of AI as these tools are used in matters relevant to their sentence, judicial processes and their rights as citizens. For example, in Finland, efforts have been made to increase staff and offenders’ digital skills and AI awareness.

What should prison system administrators be particularly aware of when making decisions about whether and when to implement this type of technology in their facilities?

FM: In terms of security and privacy, it is crucial to consider cybersecurity measures when creating or using data. This is particularly important in prison and probation settings, where security cameras, surveillance equipment and digital systems are used.

Administrators need to understand the importance of collecting and using only the strictly necessary data. In addition, they should be mindful of protecting personal data not only from internal misuse, but also from unauthorised access by third parties.

What kind of benefits could well-implemented and regulated AI and related digital technologies bring to prison and probation institutions?

FM: When it comes to regulating AI, many positive aspects can be introduced, such as ensuring the integration of human rights protection and the idea of rehabilitation at the core of AI development.

In terms of the advantages of using AI, I believe that if it is well designed, it can yield many benefits. One of the most important is that AI can be a great tool to inform decisions, to provide accurate information, to help us better understand the overall picture of what is happening inside prison and probation institutions, and to evaluate decisions and their consequences.

I also believe that AI can improve prison inmates’ rehabilitation by designing personalised intervention programmes and enhancing their digital skills. Ultimately, the key is to keep in mind that technology is neutral and that we have the ability to shape it to achieve our desired outcomes. If we want increased security, greater efficiency, fewer inmates in prison, or a more humane approach to criminal justice, we need to design the system with those goals in mind.

HK: We can use technology to improve our resource management in many ways.

Simple things, such as day-to-day logistics, could be automated or supported by smarter technology. We can also improve security within the prison system by using smart technology such as AI. This technology can provide proactive analysis of CCTV footage and surveillance systems, discreet monitoring of vital parameters of vulnerable inmates, and natural language processing to monitor phone calls, for example. AI can also be used to improve HR processes, such as recruitment.

However, I would like to stress that the most important use of technology in prisons is to support practitioners in their decision-making.

AI has a significant role to play from a rehabilitative perspective by helping to identify the best approach and treatment for each individual.

By embedding this type of technology into Offender Management Systems (OMS), we can facilitate the process and improve the quality of care for the inmate. It’s also important to have safeguards in place to prevent the use of AI in a repressive manner towards inmates.

PP: I believe there is a lot of potential for using AI in corrections, especially when it comes to complex issues. The power of AI lies in its processing capabilities, as it can handle large amounts of data that would be difficult or even impossible for a human to process in a short period of time.

There are three key areas where AI can be utilised in criminal justice organisations: security, offender management, and the automation and digitisation of staff workflows and processes.

Not only can this lead to cost-effectiveness but it can also aid in making better analyses and judgments that can improve results in prison and probation.

Ilina Taneva is the Secretary to the PC-CP, Council of Europe. She has been secretary to several intergovernmental committees elaborating standards in the field of penal and penitentiary law, prisons, probation and aftercare, crime prevention, and juvenile justice.

Håkan Klarin is a member of the PC-CP Scientific Experts team. He is the CIO/IT Director of the Swedish Prison and Probation Services.

Pia Puolakka is a member of the PC-CP Scientific Experts team. She is the Project Manager of the Smart Prison Project and Team Leader of the Safety, Security and Individual Coaching Team at the Prison and Probation Service in Finland.

Fernando Miró Llinares is a member of the PC-CP Scientific Experts team. He is a Professor of Criminology and Criminal Law at the Miguel Hernández University of Elche, Spain.